We all generate a lot of information in our lives and in our work:

contacts, events, emails, tasks, photos, plans, budgets, financial records, media collections…

Computers are supposed to help us organise it all. So how’s that working out?

For the most part, we use application programs. Each application program works with information of a particular kind, with a polished and specific user interface. However, it fixes a particular schema for that information, and it is typically difficult to combine information from multiple applications.

Alternatively, we can work with information more loosely and flexibly in a spreadsheet. However, this provides a more limited user interface experience, and the very looseness makes it difficult to reason abstractly about the type and schema of the information.

Pinafore is an attempt to reimagine how computers represent and store information, and how users interact with it. It allows users to create their own schemas for information, and create their own interfaces to it.

This 0.1 release includes some of the major pieces: a type system for information, a language, a storage system, some composable user interface elements. Enough to get the gist of the project, and perhaps suggest some future possibilities. But much more needs to be done.

Language

Pinafore is an interpreted language. Information is stored as predicate/subject/object triples in a database in your home directory, and the user interfaces are created with GTK+.

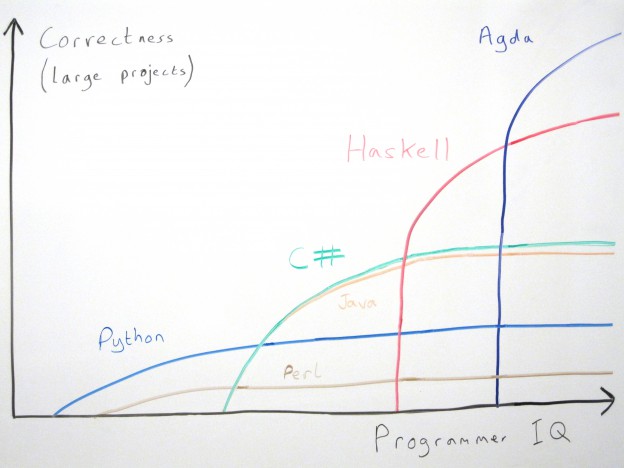

Pinafore generally resembles Haskell. It has a type system derived from Hindley-Milner, and features pattern-matching, lazy evaluation, and separation of pure functions from executable actions. There are some differences however:

- There is no “top level”. A Pinafore file consists of a single expression. Type declarations, like bindings, are declared within let expressions.

- Layout is not syntactically significant. Instead, lines are terminated by semicolons, and do and case expressions are terminated with the end keyword.

- The colon is used for type signatures, while the double colon is used for list construction, the other way around from Haskell.

- Line comments start with #, while (nestable) block comments are marked with {# and #}.

- Only one equation is allowed for a function definition. Argument patterns can be matched with case expressions.

Of course, there are many features of Haskell that Pinafore lacks, and vice versa.

Type System

Pinafore is a strongly-typed language. The type system implements Stephen Dolan’s Algebraic Subtyping, which is an extension of Hindley-Milner to allow subtyping. This type system is decidable: if an expression has a type, Pinafore can always infer a principal type for it. Like Haskell, however, you can also add type signatures to definitions.

A subtype relation P <: Q is a relationship between two types, “P is a subtype of Q”, which simply means “every P is a Q”, or “allow a P where a Q is expected”. Of course, this implies an inclusion function P → Q that actually converts the P to the Q. These functions do not have to be injective, nor does there need to be any kind of reverse function Q → Maybe P, though these do exist in Pinafore in some cases.
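The idea of a subtype relation backed by an inclusion function can be pictured with a small Python sketch. This is not Pinafore code: the choice of integers and `fractions.Fraction`, and the names `int_to_rational`, `rational_to_int`, and `halve`, are assumptions made purely for illustration.

```python
from fractions import Fraction
from typing import Optional


def int_to_rational(n: int) -> Fraction:
    """Inclusion function P -> Q for the illustrative relation int <: Fraction."""
    return Fraction(n, 1)


def rational_to_int(q: Fraction) -> Optional[int]:
    """Optional reverse function Q -> Maybe P: succeeds only for whole numbers."""
    return q.numerator if q.denominator == 1 else None


def halve(q: Fraction) -> Fraction:
    """A function that expects a Q."""
    return q / 2


# "Allow a P where a Q is expected": convert with the inclusion function first.
half_of_three = halve(int_to_rational(3))
```

In a language with subtyping the conversion is inserted for you; here it is written out by hand to show what the relation implies.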

Two types are equivalent if each is a subtype of the other.

Polarity

The type system distinguishes positive and negative types. This is necessary, because certain type operations are only permitted with certain polarities:

- A positive type is a type that can appear in a positive position. Think of this as the type of a value you’ve defined in your program. The type signature of a value is such a positive position.

- A negative type is a type that can appear in a negative position. Think of this as the type of acceptance of values, such as the argument of a function type (that is itself positive).

- An ambipolar type is a type that is both positive and negative. This includes simple types such as Text, Integer, and so forth.

- If P and Q are positive types, then P | Q is a positive type. You can read this as “a P or a Q, not telling you which”. As you might expect:

  - P <: P | Q

  - Q <: P | Q

  - If P <: R and Q <: R, then P | Q <: R.

- If P and Q are negative types, then P & Q is a negative type. Think of this as “must be both a P and a Q”. Likewise:

  - P & Q <: P

  - P & Q <: Q

  - If R <: P and R <: Q, then R <: P & Q.

- None is a positive type, that is empty (and is a subtype of every type). None | P = P.

- Any is a negative type, that accepts anything (and is a supertype of every type). Any & P = P.
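The laws for | and & listed above can be checked in a toy model where a type is simply the finite set of values it contains. The Python sketch below is only that model: it ignores polarity entirely, the value universe is made up, and names such as `laws_hold` and `SMALL` are hypothetical.

```python
# Finite set model: each "type" is just the set of values that inhabit it.
ANY = frozenset({1, 2, 3, "a", "b"})  # the whole (tiny) universe of values
NONE = frozenset()                    # the empty type
INTEGER = frozenset({1, 2, 3})
TEXT = frozenset({"a", "b"})
SMALL = frozenset({1, 2})             # made-up subtype of INTEGER


def is_subtype(p, q):
    """P <: Q holds when every P is a Q."""
    return p <= q


def laws_hold(p, q, r):
    """Check the union and intersection laws for one choice of P, Q, R."""
    join, meet = p | q, p & q
    return all([
        is_subtype(p, join) and is_subtype(q, join),
        not (is_subtype(p, r) and is_subtype(q, r)) or is_subtype(join, r),
        is_subtype(meet, p) and is_subtype(meet, q),
        not (is_subtype(r, p) and is_subtype(r, q)) or is_subtype(r, meet),
        (NONE | p) == p,
        (ANY & p) == p,
    ])
```

Calling `laws_hold(INTEGER, TEXT, ANY)` exercises every rule at once for one choice of types.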

Here are some examples of expressions with the principal types that Pinafore will infer. Note that Pinafore uses a single rather than double colon for type signatures:

\x -> 3: Any -> Integer

Nothing: Maybe None

\b -> if b then "hi" else 3: Boolean -> (Text | Integer)

\x -> x + textlength x: (Integer & Text) -> Integer

Type Constructors

Like Haskell, Pinafore has type constructors such as Maybe, [] (list), (,) (pair), -> (function) and so on. But all type parameters must be types (i.e. as if of Haskell’s kind *): in addition, each parameter must be either covariant or contravariant. This gives subtype relations. For example, suppose F is a type constructor with one argument:

- If F is covariant in its argument, then F x has the same polarity as x.

  - P <: Q implies F P <: F Q.

- If F is contravariant in its argument, then F x has the opposite polarity to x.

  - P <: Q implies F Q <: F P.
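One way to see why variance yields these subtype relations is to lift an inclusion function P → Q through a type constructor. The Python sketch below does this for two stand-in constructors: lists (covariant) and "consumers" of a value (contravariant). The helper names `lift_covariant` and `lift_contravariant` are hypothetical, and the sketch illustrates the idea rather than Pinafore's implementation.

```python
from typing import Callable, List, TypeVar

P = TypeVar("P")
Q = TypeVar("Q")


def lift_covariant(inc: Callable[[P], Q]) -> Callable[[List[P]], List[Q]]:
    """Covariant F = list: an inclusion P -> Q gives F P -> F Q."""
    return lambda xs: [inc(x) for x in xs]


def lift_contravariant(
    inc: Callable[[P], Q],
) -> Callable[[Callable[[Q], str]], Callable[[P], str]]:
    """Contravariant F x = (x -> str): an inclusion P -> Q gives F Q -> F P."""
    return lambda consumer: (lambda p: consumer(inc(p)))


def int_to_float(n: int) -> float:
    """Illustrative inclusion function for int <: float."""
    return float(n)


def show_float(x: float) -> str:
    """A consumer of floats."""
    return f"{x:.1f}"


floats = lift_covariant(int_to_float)([1, 2])
show_int = lift_contravariant(int_to_float)(show_float)
```

Note the direction flips in the contravariant case: a consumer of floats becomes a consumer of integers, not the other way around.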

Of course, some types are, morally, neither contravariant nor covariant in their arguments. For these we use a pair of type parameters in a special syntax, one contravariant (marked with -) and one covariant (marked with +). For example, the WholeRef type constructor represents references with get and set operations:

WholeRef {-p,+q}

get: WholeRef {-p,+q} -> Action q

(:=): WholeRef {-p,+q} -> p -> Action ()

Pinafore has some abbreviations to make working with these a little easier, e.g. WholeRef T = WholeRef {-T,+T}, and WholeRef +T = WholeRef {-Any,+T}, etc.
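The split into a contravariant "write" parameter and a covariant "read" parameter can be mimicked with Python's typing module. This is a rough analogy only: the class below is a hypothetical stand-in, not Pinafore's WholeRef, and it ignores actions and "unknown" values.

```python
from typing import Callable, Generic, TypeVar

P = TypeVar("P", contravariant=True)  # the type accepted when setting
Q = TypeVar("Q", covariant=True)      # the type produced when getting


class WholeRef(Generic[P, Q]):
    """Toy reference with separate write (P) and read (Q) types."""

    def __init__(self, getter: Callable[[], Q], setter: Callable[[P], None]) -> None:
        self._getter = getter
        self._setter = setter

    def get(self) -> Q:
        return self._getter()

    def set(self, value: P) -> None:
        self._setter(value)


# Backing cell holds a float; this view accepts ints and reads back floats.
_cell = [0.0]


def _store(n: int) -> None:
    _cell[0] = float(n)


ref: WholeRef[int, float] = WholeRef(lambda: _cell[0], _store)
ref.set(3)
```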

Recursive Types

Pinafore has “equirecursive” types, written in the form rec v. T, where T is a type expression where v appears only covariantly. The key fact of recursive types is that they are equivalent to their unrolling. For example, these two types are equivalent:

rec a. (a, Maybe a)

(rec a. (a, Maybe a), Maybe (rec a. (a, Maybe a)))

Recursive types are necessary for principality (that all typeable expressions have a principal type), though they’re not much used in practice.

Data Types

Pinafore allows you to create your own algebraic data types, like the data keyword in Haskell. Here’s an example:

datatype StopwatchState = StoppedState Duration | RunningState Time;

Typed Storage

Pinafore stores “knowledge” as relationships between entities of various types. The relationship types are called morphisms, which can be composed as the name suggests.

Types of entities are all subtypes of the Entity type. These include:

- Literal types for small pieces of data, such as Integer, Number, Boolean etc.

- Open entity types, that simply represent arbitrary points, declared with the opentype keyword. Values of open entity types can be declared statically (with an anchor) with the openEntity keyword, or generated at run-time with the newOpenEntity function.

- Closed entity types, that have constructors, declared with the closedtype keyword. These are similar to data types, except that each constructor has an anchor, and the contained types must themselves be entity types.

Anchors are 256-bit values usually hashed from a literal string in your program. Pinafore erases types when storing information in its storage: it does not store the structure of types nor does it store which values have which types. Instead, it uses anchors to identify information in storage.
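As a rough picture of what "hashed from a literal string" means, here is a Python sketch. The use of SHA-256 is an assumption made for the example (the text above only says anchors are 256-bit values), and the function name `anchor` is hypothetical.

```python
import hashlib


def anchor(literal: str) -> bytes:
    """Hypothetical anchor: a 256-bit value derived from a literal string.

    SHA-256 is an assumption for this sketch, not a statement about
    which hash Pinafore actually uses.
    """
    return hashlib.sha256(literal.encode("utf-8")).digest()


# The binding name on the left plays no part in the stored identity;
# only the literal string does.
name_anchor = anchor("myschema.name")
renamed_binding = anchor("myschema.name")
mother_anchor = anchor("myschema.mother")
```

Because identity comes from the literal, renaming a binding in the program leaves the stored information reachable, while changing the literal points at different information.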

Open Entity Example

Here’s an example. Suppose we want to store two relationships concerning people:

- The name of some person p is “James”.

- The mother of p is some person q.

Firstly, we will need a type for people. This can be an open entity type: it has no information of its own besides identity: all information about people comes from morphisms.

opentype Person;

We also need properties for “name” and “mother”. We need to give these anchors, since this is what will identify them in storage, not the names of the language bindings we happen to use.

“Name” is a property from Person to Text, because the name of a person is text. We give it the anchor !"myschema.name".

“Mother” is a property from Person to Person, because the mother of a person is a person. We give it the anchor !"myschema.mother".

Properties are morphisms, so the type of them is a morphism type, indicated by ~>. In fact, properties generate morphisms: you can compose morphisms together that are strings of properties. In this case, you can compose these two to get a morphism for “name of mother”.

name: Person ~> Text;

name = property @Person @Text !"myschema.name";

mother: Person ~> Person;

mother = property @Person @Person !"myschema.mother";

Now we need entities p and q. These might be generated at run-time or obtained elsewhere, but here we’ll declare them statically. Again, it is the anchor that identifies them in storage, not the bindings p and q.

p: Person;

p = openEntity @Person !"someperson";

q: Person;

q = openEntity @Person !"otherperson";

Actually storing the relationships is an Action (similar to IO in Haskell). And like Haskell, Pinafore has do notation to make working with actions easier:

do

name !$ {p} := "James";

mother !$ {p} := q;

end

The !$ operator applies a morphism to a reference to get another reference. Since p is an entity, not a reference, we must first convert it to a reference using “reference notation” {p}.

The := notation sets the value of a reference.

Here’s a typed breakdown of that first action:

p: Person

{p}: WholeRef +Person

name !$ {p}: WholeRef Text

name !$ {p} := "James": Action ()

Here’s what it looks like all put together:

let

opentype Person;

name: Person ~> Text;

name = property @Person @Text !"myschema.name";

mother: Person ~> Person;

mother = property @Person @Person !"myschema.mother";

p: Person;

p = openEntity @Person !"someperson";

q: Person;

q = openEntity @Person !"otherperson";

in do

name !$ {p} := "James";

mother !$ {p} := q;

end

This is a complete Pinafore program. Running it will store those two relations in Pinafore’s persistent storage.

Of course, we can combine the morphisms in other ways:

# everyone whose mother's name is Kate

(name !. mother) !@ {"Kate"}

# the (name, mother) pair of p

(name !** mother) !$ {p}

It is important to note that Pinafore does not store entities per se, it stores relations between entities. One cannot, for example, retrieve all entities of type Person. Types of entities such as Person are erased for storage. However, one can retrieve all entities that have name “James”, or whose mother is some entity q.
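To make the storage model concrete, here is a toy in-memory version in Python. It is a sketch under stated assumptions, not Pinafore's storage engine: `TripleStore`, `Morphism`, `make_property`, and `compose` are hypothetical names, plain strings stand in for anchors, and the extra fact that the mother is named "Kate" is added only so that the composed lookups return something.

```python
from typing import Callable, Dict, Hashable, Optional, Set, Tuple


class TripleStore:
    """Toy store of (predicate, subject) -> object relations. No types are kept."""

    def __init__(self) -> None:
        self._objects: Dict[Tuple[str, Hashable], Hashable] = {}

    def set(self, predicate: str, subject: Hashable, obj: Hashable) -> None:
        self._objects[(predicate, subject)] = obj

    def get(self, predicate: str, subject: Hashable) -> Optional[Hashable]:
        return self._objects.get((predicate, subject))

    def subjects_with(self, predicate: str, obj: Hashable) -> Set[Hashable]:
        return {s for (p, s), o in self._objects.items() if p == predicate and o == obj}


class Morphism:
    """A forward lookup plus an inverse-image lookup."""

    def __init__(self, apply: Callable, inverse: Callable) -> None:
        self.apply = apply      # subject -> object, or None if unknown
        self.inverse = inverse  # object -> set of subjects


def make_property(store: TripleStore, anchor: str) -> Morphism:
    return Morphism(lambda s: store.get(anchor, s),
                    lambda o: store.subjects_with(anchor, o))


def compose(outer: Morphism, inner: Morphism) -> Morphism:
    """outer after inner, in the spirit of `name !. mother`."""
    def apply(s):
        mid = inner.apply(s)
        return None if mid is None else outer.apply(mid)

    def inverse(o):
        return {s for mid in outer.inverse(o) for s in inner.inverse(mid)}

    return Morphism(apply, inverse)


store = TripleStore()
name = make_property(store, "myschema.name")
mother = make_property(store, "myschema.mother")
store.set("myschema.name", "someperson", "James")
store.set("myschema.mother", "someperson", "otherperson")
store.set("myschema.name", "otherperson", "Kate")  # illustrative extra fact

name_of_mother = compose(name, mother)
```

The store offers lookups by property and value in either direction, but nothing like "all people", since no type information is recorded.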

Closed Entity Types

Closed entity types resemble data types, but they are all subtypes of Entity, and so can be stored. Every constructor of a closed entity type includes an anchor, to identify that constructor in storage. Here’s an example:

closedtype CelestialLocation

= EquatorialLoc Number Number !"EquatorialLoc"

| EclipticLoc Number Number !"EclipticLoc";

Reinterpretability

Any item of information retrieved from storage can be “unknown”, and Pinafore is robust with regard to what it happens to find in storage. This gives a certain amount of flexibility in modifying an existing “schema”, or system of entity types, without having to transform data in storage. For example, if you remove a property from your program, Pinafore will simply ignore that information in storage. If you add a property, Pinafore will initially find all values of that property to be “unknown”. Constructors can be added and removed from closed entity types. If Pinafore finds something it doesn’t recognise or cannot parse as it expects, it treats it as “unknown”.
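The "unknown" behaviour can be sketched in Python as reads that never fail, returning `None` when a value is absent or does not parse as the requested type. Everything here is hypothetical: the raw-text storage format, the helper names, and the anchors "myschema.age" and "myschema.nickname" are invented for the example.

```python
from typing import Dict, Optional, Tuple

# Toy storage: (property anchor, subject anchor) -> raw stored text.
RawStore = Dict[Tuple[str, str], str]

storage: RawStore = {
    ("myschema.name", "someperson"): "James",
    ("myschema.age", "someperson"): "not a number",   # written by an older schema
    ("myschema.shoesize", "someperson"): "43",        # property no longer in the program
}


def read_text(store: RawStore, prop: str, subject: str) -> Optional[str]:
    """Return the stored text, or None ("unknown") if nothing is stored."""
    return store.get((prop, subject))


def read_integer(store: RawStore, prop: str, subject: str) -> Optional[int]:
    """Return the stored integer, or None ("unknown") if absent or unparseable."""
    raw = store.get((prop, subject))
    if raw is None:
        return None
    try:
        return int(raw)
    except ValueError:
        return None
```

A property the program no longer mentions, such as the shoe size above, is simply never read, so the stored data needs no migration.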

Composable User Interface Models

In terms of the Model/View/Controller way of looking at user interface, the view and controller are represented by user interface elements (of type UI), while the model is represented by references (of type WholeRef, SetRef, and FiniteSetRef).

A reference represents the state of some thing. The user may wish to retrieve some part of that state, or make some change to it, or be notified when it changes. References are thus “live”: when connected to a UI element, the user can use the UI to change the reference, but also the reference can update the UI when its state changes.

- Whole references (WholeRef) represent a single value (which might be “unknown”). There are operations for getting, setting, and deleting (making unknown).

- Set references (SetRef) represent some arbitrary set or predicate of some type. There are operations for adding and removing members, and for checking membership of some value.

- Finite set references (FiniteSetRef) are set references that have a finite number of members, that can be retrieved.

The various kinds of references can be composed in various ways, such as various set operations (union, intersection, Cartesian product and sum).

Pinafore’s “reference notation” makes working with whole references a little easier. For example, given two whole references to integers, we can create a new whole reference that is the sum of them:

p: WholeRef Integer;

q: WholeRef Integer;

pq: WholeRef +Integer;

pq = {%p + %q};

Whenever p or q updates, then pq also updates. Of course, pq is read-only: attempts to set it will fail.
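The "live" behaviour of a derived reference resembles the observer pattern. The Python sketch below is a minimal illustration of that pattern, not Pinafore's mechanism: the classes `WholeRef` and `DerivedRef` are hypothetical, and raising `TypeError` is just one assumed way for a failed set to show up.

```python
from typing import Any, Callable, List


class WholeRef:
    """Toy mutable reference that notifies subscribers when it changes."""

    def __init__(self, value: Any) -> None:
        self._value = value
        self._subscribers: List[Callable[[], None]] = []

    def get(self) -> Any:
        return self._value

    def set(self, value: Any) -> None:
        self._value = value
        for notify in list(self._subscribers):
            notify()

    def subscribe(self, callback: Callable[[], None]) -> None:
        self._subscribers.append(callback)


class DerivedRef:
    """Read-only reference recomputed from other references."""

    def __init__(self, compute: Callable[[], Any], sources: List[WholeRef]) -> None:
        self._compute = compute
        self._subscribers: List[Callable[[], None]] = []
        for source in sources:
            source.subscribe(self._notify)  # pass source updates along

    def get(self) -> Any:
        return self._compute()

    def set(self, value: Any) -> None:
        raise TypeError("derived reference is read-only")

    def subscribe(self, callback: Callable[[], None]) -> None:
        self._subscribers.append(callback)

    def _notify(self) -> None:
        for notify in list(self._subscribers):
            notify()


p = WholeRef(2)
q = WholeRef(3)
pq = DerivedRef(lambda: p.get() + q.get(), [p, q])

seen: List[int] = []                       # stands in for a UI element
pq.subscribe(lambda: seen.append(pq.get()))
p.set(10)                                  # pq's subscriber is notified
```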

User Interface Elements

User interface elements are things such as text areas, buttons, check boxes, and tables. They are constructed from the references they control, and can be composed by horizontal or vertical layout, and put in windows.

Release

Version 0.1 of Pinafore is available from GitHub as a Debian package.

The Pinafore website has all the documentation.

— Ashley Yakeley